Blog entry written by Dr. Munawar Hafiz, CEO of OpenRefactory Inc.

This is a long and winding story with a set of lessons at the end. There is a TL;DR at the end for the restless, but the others are encouraged to follow along.

OpenRefactory is in conversations with the alpha-omega project of OpenSSF on an ambitious project to scan all the artifacts in the PyPI repository and work with the open source maintainers to get the security problems fixed. This blog post describes the different approaches OpenRefactory is exploring to collaborate with the maintainers of the open source projects and requests feedback from the community.

At this point, a feasibility study is ongoing to understand and solve two critical issues:

- How to detect high quality security issues without noise?

- How to bring the findings to the open source software maintainers and get them fixed?

Detecting High Quality Security Issues

The first issue is about improving the quality of static analysis tools so that they generate much less noise. OpenRefactory’s Intelligent Code Repair (iCR) is well positioned to solve the noise issue. Its underlying algorithms improve upon the state of the art in static analysis, and therefore iCR generates results with a high concentration of true positives and a dramatically low number of false warnings.

OpenRefactory launched its PyPI website in August 2022 to showcase the issues identified in the top 200 projects in the PyPI repository. The website is hosted at: https://pypi.openrefactory.com/

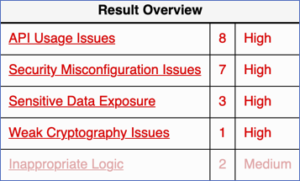

Consider the results found in ansible, the ubiquitous IT automation system. iCR identified only 21 issues, as shown in this screenshot. All of them are high to medium severity issues that need attention from the maintainers. In contrast, another well-known static analysis tool reported 3,400 issues, 98% of which were false warnings or WONTFIX issues. It also missed 40% of the high and medium severity issues that iCR found.

There are three things at play here.

- iCR reports a small number of bugs;

- Most of the results are actual bugs; and

- A number of the bugs are not found by other tools.

All of these make the results of iCR manageable and useful. This ensures that if it is run on a large number of projects as in the PyPI repository, it can give a good return on investment.

Collaborating with Open Source Maintainers

The second issue, sharing the findings, is a lot harder. Open source software maintainers are already over-worked. Surely the high concentration of true positives will help. Still, bugs are very much dependent upon the projects. A bug appreciated by one maintainer may be rejected by another. The priorities of the bugs also differ based on each maintainer’s other priorities.

This is a human problem. To get a perspective on this, on August 31 and September 1, OpenRefactory performed a small empirical study.

Study Setup

OpenRefactory randomly picked 24 of the bugs identified by iCR within the PyPI repository, and submitted pull requests. All the requests were submitted by one engineer at OpenRefactory. All of the pull requests were generated manually. They were filed under a company handle which is used for research purposes.

The pull requests were submitted with two different messages (A/B testing). 16 of them had messages that appeared more robotic. 8 of them had messages that appeared to come from a human being: they were written in the first person, with small edits that gave the messages human-like traits.

Results

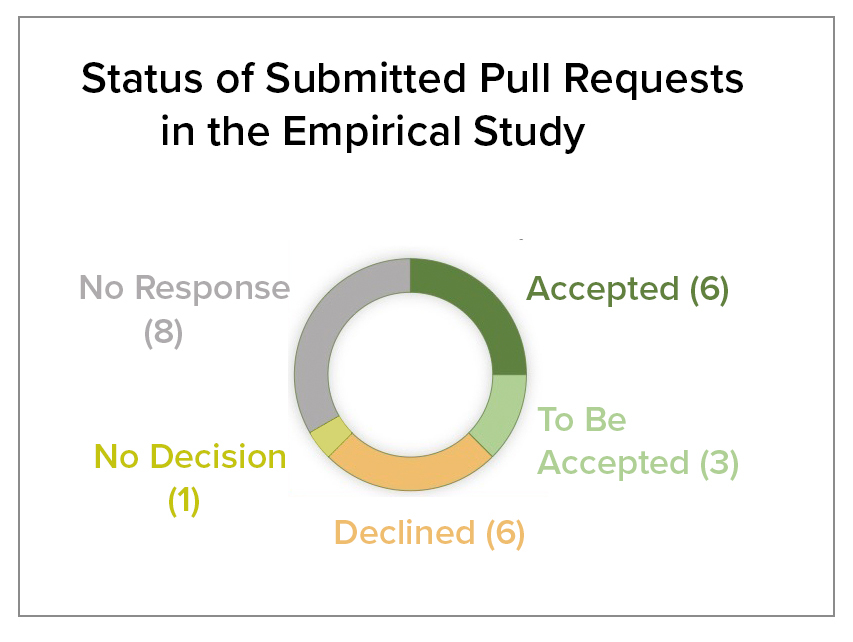

Here are the results.

- 6 pull requests are accepted;

- 3 are in the process of being accepted;

- 6 pull requests are rejected;

- 1 pull request has been commented but not yet resolved;

- 8 pull requests still have no response.
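As a quick sanity check on the tallies above, the counts can be summed in a few lines of Python. This is only an illustrative sketch: the dictionary keys are labels chosen here, and the numbers are the ones listed above.

```python
# Outcome counts copied from the results list above.
# The key names are labels chosen for this sketch.
outcomes = {
    "accepted": 6,
    "being accepted": 3,
    "rejected": 6,
    "commented, unresolved": 1,
    "no response": 8,
}

total = sum(outcomes.values())
# Pull requests that saw any action or comment from a maintainer.
responded = total - outcomes["no response"]
# Accepted plus those in the process of being accepted.
accepted_or_pending = outcomes["accepted"] + outcomes["being accepted"]

print(f"submitted: {total}")
print(f"saw some action or comment: {responded}")
print(f"accepted or being accepted: {accepted_or_pending} "
      f"({accepted_or_pending / total:.1%} of all submitted)")
```

The three totals (24 submitted, 16 with some response, 9 accepted or being accepted) are the figures the rest of the post refers to.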

Accepted Pull Requests

Of the 9 pull requests that have been accepted or are in the process of being accepted, 4 are about HTTP GET requests being made without a timeout specified, 3 are about CSRF protection being disabled, 1 is about certificate validation being disabled while establishing an SSL/TLS connection and 1 is about an unimplemented method raising a NotImplementedError.
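To make two of these bug classes concrete, here is a toy check written with Python's standard `ast` module. It is emphatically not how iCR works; it is a minimal sketch that only recognizes the literal spelling `requests.<verb>(...)`, and the function name, messages and sample code are all made up for illustration.

```python
import ast

# HTTP helper names on the `requests` module that this toy check looks at.
HTTP_FUNCS = {"get", "post", "put", "delete", "head", "patch"}


def find_risky_http_calls(source: str) -> list[tuple[int, str]]:
    """Return (line, message) pairs for requests.<verb>(...) calls that
    omit timeout= or pass verify=False. Hypothetical helper for this post."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        # Match only calls spelled exactly as requests.<verb>(...).
        if not (isinstance(func, ast.Attribute)
                and isinstance(func.value, ast.Name)
                and func.value.id == "requests"
                and func.attr in HTTP_FUNCS):
            continue
        kwargs = {kw.arg: kw.value for kw in node.keywords if kw.arg}
        if "timeout" not in kwargs:
            findings.append((node.lineno, "no timeout specified"))
        verify = kwargs.get("verify")
        if isinstance(verify, ast.Constant) and verify.value is False:
            findings.append((node.lineno, "certificate validation disabled"))
    return sorted(findings)


# Made-up sample code; it is parsed as text and never executed.
SAMPLE = '''\
import requests
r1 = requests.get("https://example.com")
r2 = requests.get("https://example.com", timeout=10, verify=False)
r3 = requests.get("https://example.com", timeout=10)
'''

for line, message in find_risky_http_calls(SAMPLE):
    print(f"line {line}: {message}")
```

On the sample, the check flags line 2 for the missing timeout and line 3 for the disabled certificate validation, and leaves line 4 alone. A purely syntactic check like this misses aliased imports, sessions and wrapper functions, and cannot tell whether a finding matters for a given project, which is the kind of gap that separates a noisy tool from a useful one.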

Declined Pull Requests

The 6 pull requests that were not accepted show that the same issue may not be applicable to all projects.

- Three are about bugs that are not applicable to the project because of how the application is designed.

– The design of the application may not provide the attack surface for an issue on disabling CSRF protection (“I’m not super concerned about the security implications of someone being able to make a query to the server. There isn’t any authentication implemented at the moment anyway.”)

– A temporary file creation with the unsafe mktemp API method may be done as a part of a design decision because “the temporary file has to be opened and written by git in a separate process, and is later renamed.”

– Because of backward compatibility, iCR’s suggestion to use ftplib.FTP_TLS in place of ftplib.FTP was declined. In this case, the patch was also poorly crafted.

- Two are logic bugs about list length checks. They were declined because the affected code is no longer in use. This patch could also be improved, and a maintainer provided a helpful suggestion.

- One was declined because the project-specific process for filing bugs was not followed (“please file a ticket the next time first”).
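The mktemp case is worth a short illustration. `tempfile.mktemp` only returns a file name, so another process can create a file at that path before the caller does. One common alternative is sketched below, under the assumption that the external writer is happy to overwrite an already existing, empty file; that may not hold for the project in question, whose maintainer had a concrete design reason. The helper name is hypothetical, and a small Python child process stands in for the external tool (git in the quoted case).

```python
import os
import subprocess
import sys
import tempfile


def write_via_subprocess(final_path: str, text: str) -> None:
    """Let a child process fill a securely created temp file, then rename it.
    Hypothetical helper written for this post."""
    target_dir = os.path.dirname(os.path.abspath(final_path))
    # mkstemp creates the file itself, readable and writable only by the
    # current user, instead of just returning a name as mktemp does.
    fd, tmp_path = tempfile.mkstemp(dir=target_dir, suffix=".tmp")
    os.close(fd)  # the child process reopens the file by path
    try:
        # Stand-in for the external tool that writes the file.
        subprocess.run(
            [sys.executable, "-c",
             "import sys, pathlib; "
             "pathlib.Path(sys.argv[1]).write_text(sys.argv[2])",
             tmp_path, text],
            check=True,
        )
        # Rename into place; source and target share a directory.
        os.replace(tmp_path, final_path)
    except BaseException:
        # Clean up the temporary file if anything went wrong.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


# Usage: write into a throwaway directory and read the result back.
with tempfile.TemporaryDirectory() as workdir:
    out = os.path.join(workdir, "result.txt")
    write_via_subprocess(out, "hello")
    with open(out) as fh:
        print(fh.read())
```

Whether a change like this is acceptable still depends on the project, which is exactly the point the maintainers made.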

Discussion

In two cases out of the 16 pull requests that have seen some action or comment, the maintainer raised a concern about a bot generating the pull request. Curiously, one of them had the more robotic message, and the other one had the more human-like message. Does that mean that only the quality of the pull request matters, and not the messaging? There is not sufficient evidence to draw a conclusion.

The two messages that were used were also not very different from each other. Security researcher Jonathan L. files automated pull requests, but his messaging is more detailed. That being said, the acceptance rate of those pull requests is not encouraging (perhaps because of the kind of bugs targeted). For the case of downloading artifacts over an insecure protocol, more fixes were accepted (~40%), but for others the acceptance rate is about 5%.

In the end, the content matters. If the pull request is about an issue that the maintainers care about, it will be accepted irrespective of the messaging. But the same issue that Peter thinks is important for his project may not be applicable for Paul in another project. The CSRF protection disabling issue is accepted by 3 projects but is rejected in one project and is hanging (a comment is made but no actions) in another one. This means that the filing has value, but not the same value for all projects.

If the pull requests patch a known vulnerability, then the patches may appeal to every project maintainer, provided the patch is done in the right way. In the case of iCR, the bugs are all new to the project. A pull request is then more about starting a discussion on whether the fix is good and appropriate than about the patch itself.

Because of this, perhaps the right mechanism is not to file pull requests at all. The community as a whole is still in the process of figuring out the right way (see the Guidance for Security Researchers to Coordinate Vulnerability Disclosures with Open Source Software Projects). Another approach is to file security advisories and collaborate to fix the vulnerabilities in a private fork. OpenRefactory is now experimenting with this approach. That will be featured in another blog post.

TL;DR

For the restless, we encourage you to actually read the post again. There are many interesting nuggets.

- There is a lot of security hardening needed in all open source projects;

- OpenRefactory’s Intelligent Code Repair (iCR) provides a high concentration of actual bugs and therefore can be used to scan a huge number of open source projects;

- About 40% of the manually reported bugs (9 of 24, counting those in the process of being accepted) have been accepted by the maintainers with very little effort from both sides;

- Automated pull requests are not yet welcomed by all maintainers. The approach may work for bulk patching a known vulnerability, but the bugs reported by iCR are new bugs and need handholding to carry them through;

- Since the number of bugs is very small compared to the number of bugs reported by the other tools, this may still scale to a large number of projects.

Notes:

- The image at the top is from xkcd: https://xkcd.com/2347/

- The pull requests that are submitted are available here.

- There is a Tweet from one maintainer criticizing the pull requests of OpenRefactory, assuming that they were generated by a bot. The engineer involved in running the study did not follow the protocol and sent a few direct messages to some maintainers. Clearly this was annoying. We apologize for any inconvenience this caused. As a matter of company policy, the pull requests were not generated by a bot. OpenRefactory is running this study precisely to discover and formulate an approach to communicate with the maintainers. Pushing the boundary sometimes comes at a cost.

- There is a virtual conference of open source project maintainers that is being organized by OpenRefactory’s CEO Munawar Hafiz and other contributors from the OpenSSF. This issue will be discussed in that forum.

- Thanks to Michael S. and Jonathan L. for helpful comments.

OpenRefactory is collaborating with OpenSSF to experiment on different approaches to work with the open source maintainers more effectively. We welcome community input and discussion on the best way to do it. Please connect with me by email (munawar __a_t_ openrefactory __suffix_for_companies) or catch me in the working group meetings, or shoot me a message on OpenSSF slack.